A Rule Designed To Prevent Killer Robots Is About To Expire & People Are Seriously Worried

If you think drone warfare is a problem, just wait until the killer robots come for us all. No, seriously. One U.S. general is already warning that killer robots could grow out of control and attack humanity willy-nilly. Gen. Paul Selva, the country's second-highest-ranking military officer, told the Senate Armed Services Committee that the military must keep "the ethical rules of war in place lest we unleash on humanity a set of robots that we don't know how to control," CNN reported.

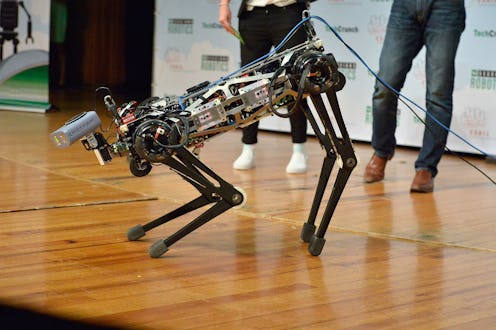

If that sounds like the plot of Terminator, that's because it does. Evidently this debate is not far off in the future, at least according to Selva, even if the technology itself has not been developed yet. The general was advocating for an extension of the rule that requires a human being to make the final decision on whether to kill someone using autonomous weapons systems.

That rule is set to expire in 2017, which worried Sen. Gary Peters of Michigan enough that he asked Selva about it during the general's reconfirmation hearing as vice chairman of the Joint Chiefs of Staff. "I don't think it's reasonable for us to put robots in charge of whether or not we take a human life," Selva responded resolutely.

Even if the technology isn't up to speed, there will be a debate within the Defense Department on "whether or not we take humans out of the decision to take lethal action," Selva argued. Already the Navy has pushed for an increased use of autonomous drones. The general favors keeping the rule that requires a human to make that decision; he noted, "we take our values to war," and also focused on the need to follow international law on war, something that a robot is not capable of.

His views are largely in line with those of a group of scientists and entrepreneurs that includes Cambridge researcher Stephen Hawking, Tesla and SpaceX founder Elon Musk, and Apple co-founder Steve Wozniak. They signed an open letter calling for a "ban on offensive autonomous weapons beyond meaningful human control":

Autonomous weapons are ideal for tasks such as assassinations, destabilizing nations, subduing populations, and selectively killing a particular ethnic group. We therefore believe that a military AI arms race would not be beneficial for humanity. There are many ways in which AI can make battlefields safer for humans, especially civilians, without creating new tools for killing people.

One concern is that the United States' adversaries will move ahead on such weaponry, leaving the country's military at a competitive disadvantage. Sen. Peters questioned Selva about that too. Selva responded that the ban "doesn't mean that we don't have to address the development of those kinds of technologies and potentially find their vulnerabilities and exploit those vulnerabilities."

Defensive plans and technologies make a lot of sense, but be glad for Selva's take on autonomous offensive robots. That movie never ends well.