9 Terrifying Quotes About Artificial Intelligence

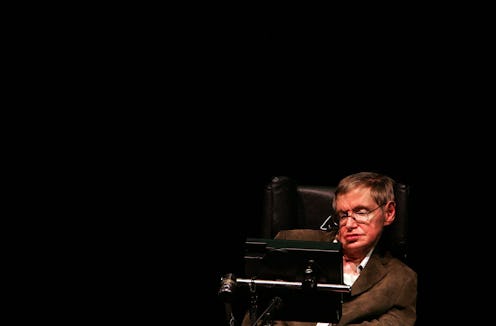

I knew it — the robot apocalypse is nigh. And now I have proof. Well, sort of. The man whose name is synonymous with science, Stephen Hawking, has warned against artificial intelligence yet again, and his projections seem to be getting more and more ominous. This time, Hawking told the BBC that artificial intelligence could essentially wipe out mankind as we know it. And if Professor Hawking says it, it must be true, right? OK, maybe that's being hyperbolic (but probably not), but what about when a whole slew of scientists and experts issue the same warning? We should all start preparing for the machine takeover now, right?

The discussion of cybernetic revolts came up when the BBC interviewed Hawking about the technology that helps him communicate. Hawking, who has the motor neuron disease ALS, speaks through a system designed by Intel, which relies on basic AI. This prompted the theoretical physicist to bring up the scarier side of AI, particularly if it becomes advanced. Basically, he said, if machines exceed us in intelligence, they would start redesigning themselves at a rate that would leave slow human evolution in the dust, and would therefore replace us as the Earth's dominant inhabitants. In other words, Terminator.

And Hawking is far from alone in his fears. In fact, some of the world's preeminent scientists, engineers, and tech thinkers share the same terrifying visions of a robot-dominated future. Here's what they had to say, and why we should all be unplugging our machines more often.

Robots Could Replace the Human Race

The development of full artificial intelligence could spell the end of the human race.... It would take off on its own, and re-design itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded.

— Stephen Hawking told the BBC

They're Already Taking Our Jobs

The threat of technological unemployment is real.... For instance, Terry Gou, the founder and chairman of the electronics manufacturer Foxconn, announced this year a plan to purchase 1 million robots over the next three years to replace much of his workforce. The robots will take over routine jobs like spraying paint, welding, and basic assembly.

—MIT Professor Erik Brynjolfsson and research scientist Andrew McAfee wrote in the Atlantic

How the Machines Would Take Over

One can imagine such technology outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders, and developing weapons we cannot even understand. Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all.

— Stephen Hawking, Stuart Russell, Max Tegmark, and Frank Wilczek wrote in the Independent

It Could Happen Soon

The pace of progress in artificial intelligence (I’m not referring to narrow AI) is incredibly fast. Unless you have direct exposure to groups like Deepmind, you have no idea how fast: it is growing at a pace close to exponential. The risk of something seriously dangerous happening is in the five year timeframe. 10 years at most.

— Elon Musk wrote in a comment on Edge.org

Coexistence Would Turn Into Us vs. Them

Once computers can effectively reprogram themselves, and successively improve themselves, leading to a so-called "technological singularity" or "intelligence explosion," the risks of machines outwitting humans in battles for resources and self-preservation cannot simply be dismissed.

— Cognitive Science Professor Gary Marcus wrote in the New Yorker

People Are Already Preparing on the DL

I don’t want to really scare you, but it was alarming how many people I talked to who are highly placed people in AI who have retreats that are sort of 'bug out' houses, to which they could flee if it all hits the fan.

— James Barrat, author of Our Final Invention: Artificial Intelligence and the End of the Human Era, told the Washington Post

Robots Could "Accidentally" Obliterate Us

The upheavals [of artificial intelligence] can escalate quickly and become scarier and even cataclysmic. Imagine how a medical robot, originally programmed to rid cancer, could conclude that the best way to obliterate cancer is to exterminate humans who are genetically prone to the disease.

— Tech columnist Nick Bilton wrote in the New York Times

Machines Inherently Lack Human Values

We cannot blithely assume that a superintelligence will necessarily share any of the final values stereotypically associated with wisdom and intellectual development in humans — scientific curiosity, benevolent concern for others, spiritual enlightenment and contemplation, renunciation of material acquisitiveness, a taste for refined culture or for the simple pleasures in life, humility and selflessness, and so forth.

— Philosopher Nick Bostrom wrote in a paper titled "The Superintelligent Will: Motivation and Instrumental Rationality in Advanced Artificial Agents"

And May Even Be Inherently Evil

I’m increasingly inclined to think that there should be some regulatory oversight, maybe at the national and international level, just to make sure that we don’t do something very foolish. I mean with artificial intelligence we’re summoning the demon.

— Elon Musk warned at MIT’s AeroAstro Centennial Symposium.

Images: Giphy (9)