Instagram's Newest Feature Gives People A Chance To Think Twice About Mean Captions

If you’ve been anywhere near the internet, chances are good that you’ve seen or experienced some form of bullying. In an effort to mitigate online harassment, Instagram launched a new anti-bullying feature on Dec. 16 that gives users a chance to rethink potentially mean photo and video captions before posting.

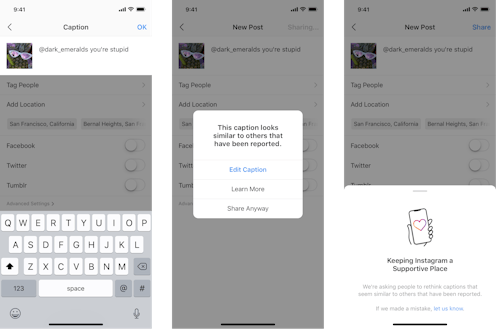

Now, when someone writes a caption on a feed post, Instagram’s AI will detect any language that could potentially be read as “offensive.” If the AI notices offensive language, the user will receive a prompt noting their caption is “similar to those reported for bullying.” Then, the user will be able to edit or delete their caption before it’s posted.

As far as what qualifies as “offensive language,” Instagram says the feature utilizes AI tech that will identify language that's previously violated the platform's Community Guidelines. In other words, if it’s been banned from Instagram before, the AI will flag it.

According to an Instagram press release, the platform also hopes the new feature will be an educational tool. By warning users about their language, it can make them more aware of the platform's rules. The feature will be launched in select countries Monday, including the U.S., and expand globally starting in the new year.

The new feature is an expansion of another anti-bullying Instagram feature rolled out earlier this year. In July 2019, Instagram started allowing people to undo comments that may be offensive or considered bullying. When a user goes to post a potentially offensive comment, Instagram’s AI will identify it and send a notification to that user asking, “Are you sure you want to post this?” The notification also includes a note about “Keeping Instagram a Supportive Place,” which says, “We’re asking people to rethink comments that seem similar to others that have been reported.”

Unfortunately, online bullying is an all-too-common reality. A 2018 survey on cyberbullying from Pew Research Center found that 59% of teens report experiencing some form of online bullying. Unsurprisingly, young girls, in particular, are more likely to be the targets of online harassment. Despite it being a widespread problem among young people, Pew also reports that a majority of teens don’t think teachers, politicians, and social media companies are doing enough to address the issue.

According to the latest Instagram Info Center post, the platform has seen “promising results” from the comment feature launched earlier in 2019. Of the prompts asking people to rethink a comment, Instagram said, “these types of nudges can encourage people to reconsider their words.” Given these results, the social media platform is expanding the feature to include the language people use in their own post captions.

This new Instagram feature joins a number of anti-bullying efforts from social media companies like Facebook (Instagram’s parent company) and Twitter, but some communities have run into issues with them. For example, Instagram’s caption monitoring potentially poses complications in the realm of groups reclaiming language, turning slurs and hate speech into a purposeful, powerful tool. (Though, it should be noted, you have the option to select that the AI "made an error" in flagging your caption as offensive.)

Simon Tam, a member of the band The Slants and a party to a U.S. Supreme Court case on reclaiming offensive language, spoke about his experience arguing for his band's use of the word “slants” as a way to lift up Asian Americans, essentially reclaiming a term often used as a slur against the group. “The act of claiming an identity can be transformational. It can provide healing and empowerment. It can weld solidarity within a community,” Tam wrote in an op-ed about reappropriation for the New York Times. “And, perhaps most important, it can diminish the power of an oppressor, a dominant group.”

Some young people on Instagram see the new feature as growth. “I use Instagram as a creative outlet and to discover new interests. But, since presenting as an LGBTQ person, I've seen that some people post harmful or hurtful captions targeting the community, as well as other marginalized groups,” Rose Erhardt (@rozzzzlyn) said in an emailed press release from Instagram. “Feed Post Caption Warning seems like a great opportunity to give people that little nudge to reconsider their words before posting so that Instagram can be a safe space for everyone.”

At the very least, Instagram’s new anti-bullying feature will be a necessary reminder to rethink the way we talk about ourselves and others.