
9 Hollywood Memoirs That Reveal The Dark Side Of The Entertainment Industry

In the two and a half months since news broke of Harvey Weinstein's decades of sexual harassment, assault, and abuse, dozens and dozens of stories about sexual misconduct in Hollywood have come to light. While there is plenty of new reporting about the immorality of Tinseltown to read every day, there are even more insightful memoirs that reveal the dark side of Hollywood and its influence on pop culture.

For decades, the Hollywood elite have rested comfortably on pedestals above the legions of fans who obsess over every little thing stars do, from what they wear to how they eat to who they date and beyond. Recently, however, attention has shifted from the trivial details of a famous person's life to their dirtiest secrets as more and more A-listers are revealed to have troubling histories with sexual harassment, assault, and abuse. From Kevin Spacey and Louis C.K. to Nick Carter and Danny Masterson, scores of powerful men have been accused of misconduct, a trend that reveals a more disturbing truth about the industry's systematic abuse of women, and our culture's acceptance of it.

Although the public seems to be only just waking up to the dangerous reality of our pervasive rape culture, Hollywood stars have been sharing the real details about the dark side of Tinseltown since the earliest celebrity memoirs were published. In their sometimes juicy, sometimes trivial, sometimes eye-opening autobiographies, famous figures aren't afraid to dish about what really goes on behind the scenes of your favorite movies and TV shows, right down to the racism, sexism, bigotry, and abuse.

If the recent news cycle has inspired you to learn about what Hollywood is really like, check out these nine memoirs that reveal the dark side of fame and fortune.

'The Girl: A Life in the Shadow of Roman Polanski' by Samantha Geimer

Samantha Geimer was a 13-year-old aspiring model when, in 1977, Hollywood hotshot Roman Polanski drugged, assaulted, and raped her in the home of actor Jack Nicholson. In The Girl, Geimer reveals in stark detail the events of that night and, more importantly, what came after: a global media frenzy and a criminal case that ended with Polanski pleading guilty and then fleeing the country before sentencing. With raw emotion and clarity, Geimer recounts the years of pain and struggle she suffered as a result of her attack, while Polanski continued to find success and acclaim in the industry. It is a powerful and important story that is as relevant today as it was when it happened 40 years ago.

'Wishful Drinking' by Carrie Fisher

Though it is rife with humor and wit, Carrie Fisher's bestselling memoir reveals the darkness behind the laughter that so often serves as a mask in Hollywood. In Wishful Drinking, the Star Wars megastar uses her personal experience growing up as Hollywood royalty and becoming a famous actress herself to explore the rampant sexism, substance abuse, and scandal in Tinseltown. Sharp and unapologetic, this celebrity memoir is unlike any other, and a must-read for fans who want to know what life in the limelight was really like for the dearly departed star.

'Tab Hunter Confidential: The Making of a Movie Star' by Tab Hunter

The news has been filled with stories of women suffering at the hands of the Hollywood machine, but plenty of male stars have been subjected to the same kind of misconduct. In his self-titled memoir, 1950s and 1960s legend Tab Hunter describes his experiences as a closeted gay man in Hollywood whose public life and appearance were controlled and manipulated by the industry for decades. Engaging and honest, Tab Hunter Confidential is a unique and much-needed story that reveals no one is quite safe in Hollywood.

''Tis Herself: An Autobiography' by Maureen O'Hara

You may not know Maureen O'Hara by name, but in the Golden Age of Hollywood, she was one of the brightest stars. Behind her meteoric rise to fame, fueled by roles in films like The Hunchback of Notre Dame and Jamaica Inn, were tumultuous relationships with directors, producers, and even other actors. She also recounts incidents of abuse at the hands of legendary director John Ford. Bold and insightful, 'Tis Herself will take you back to the Golden Age of Hollywood to reveal that the industry has always been tainted with darkness.

'Black Is the New White' by Paul Mooney

Another book that offers as many opportunities to laugh as it does to contemplate the insidious nature of fame and fortune, Black Is the New White is Paul Mooney's memoir about his life in comedy, both behind the scenes and at center stage. For decades, Mooney was a pioneer in comedy, writing jokes for Richard Pryor and shows like Saturday Night Live, but it wasn't all fun and games. As a black man in a predominantly white field, Mooney had to overcome racial barriers not only to succeed but also to pave the way for the black comedians who came after him. A smart and incisive account of the shaping of black comedy, Black Is the New White is a must-read for anyone interested in understanding racial issues in comedy.

'Coreyography' by Corey Feldman

In his deeply personal 2013 memoir, child star and teen heartthrob Corey Feldman reveals that darkness loomed behind his rise to fame. He may have had a successful career, famous friendships, and high-profile relationships, but Feldman's star-studded life was also full of tragedy. He came from a broken family from which he emancipated himself at age 15; he suffered physical, emotional, and sexual abuse; and he was arrested and struggled with drug addiction. With heart and emotion, Coreyography weaves a heartbreaking story of pain and survival that reveals how dark Hollywood can really be.

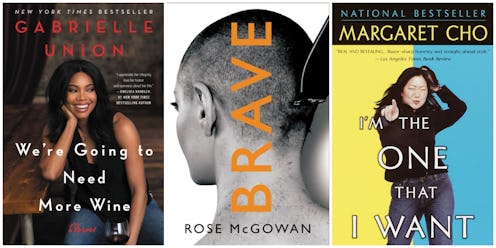

'I'm the One That I Want' by Margaret Cho

Who says you can't be funny when you're dishing dirt about how racist, sexist, and homophobic Hollywood can be? In I'm the One That I Want, comedian, TV star, and public icon Margaret Cho uses her signature humor and snark to talk about what it is like being a bisexual Asian American woman in the entertainment industry. Smart, biting, and empowering, this book is perfect for readers who want to understand the dark side of Hollywood but need a few jokes to get them through it without crying too much.

'We're Going to Need More Wine: Stories That Are Funny, Complicated, and True' by Gabrielle Union

If you want some straight talk about Hollywood, Gabrielle Union is your girl. Her hilarious and honest memoir We're Going to Need More Wine features frank discussions of race, sexuality, gender, and beauty standards as they relate to Hollywood and modern womanhood. Moving and thought-provoking, Union's compelling narrative will leave readers feeling more empathetic, and more fired up, than before.

'Brave' by Rose McGowan (Jan. 30)

In her upcoming memoir, outspoken actress and thought leader Rose McGowan reveals the intimate details of a life spent going "from one cult to another." Part autobiography, part manifesto, Brave takes readers behind the scenes of McGowan's life and of Hollywood, where she has become known as one of the fiercest and realest women fighting to expose the ugly truth about Tinseltown and the systematic misogyny that has defined the industry for so long. A revealing look at her remarkable life and a bold call to action, Brave is sure to be one of the most talked-about memoirs of 2018.